Exploring Artificial Intelligence and Glaucoma: Challenges, Opportunities, and Hope

Featuring

Atalie C. Thompson, MD, MPH

Vice Chair of Learning Health Systems, Department of Surgical Ophthalmology at Atrium Health Wake Forest Baptist

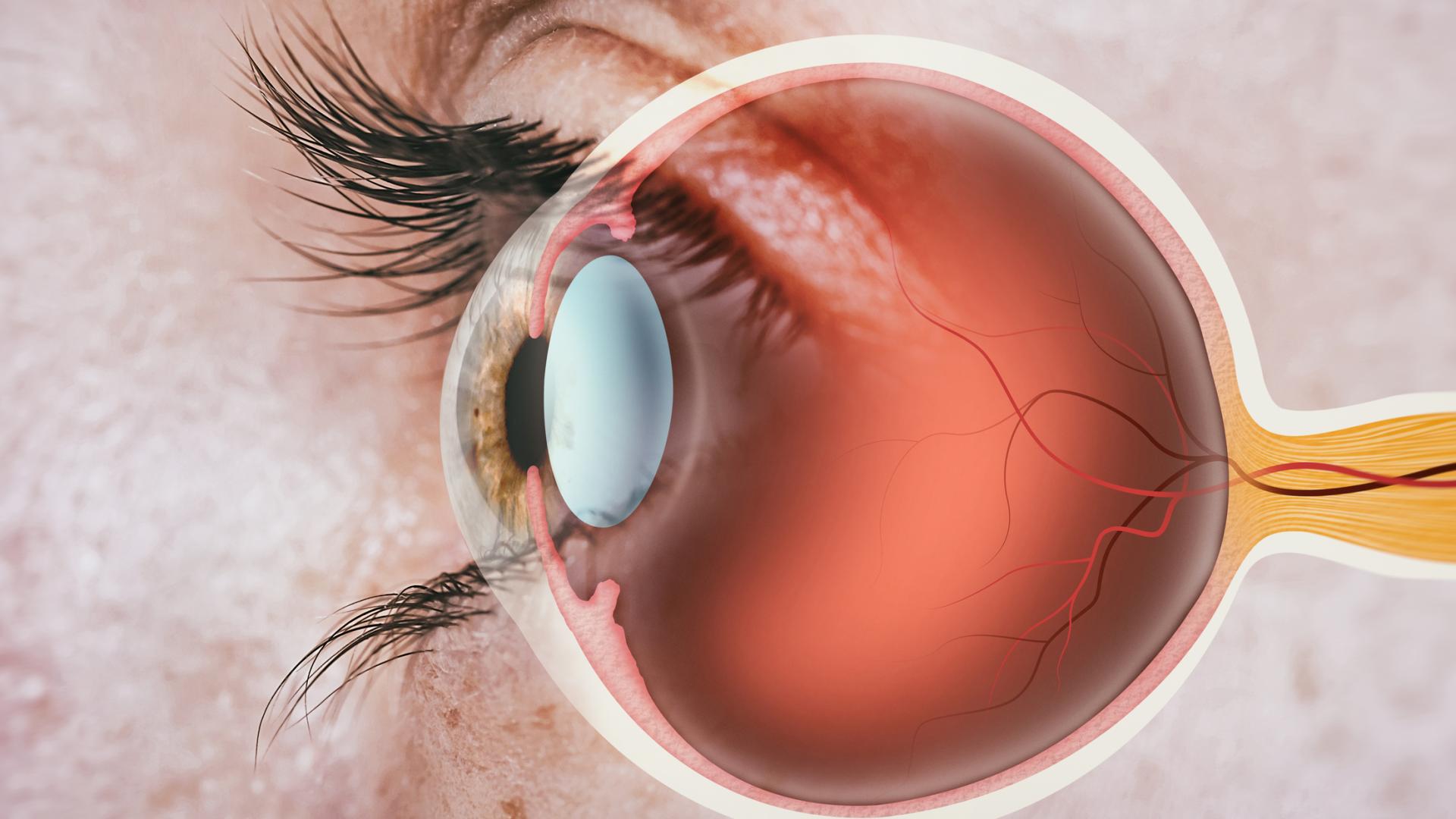

Can artificial intelligence reimagine the way we detect and diagnose glaucoma? Discover the innovative ways scientists and healthcare professionals are leveraging this technology (and the challenges that lie ahead) with expert glaucoma specialists Dr. Atalie Thompson and guest moderator, Dr. Jullia Rosdahl.

Atalie Carina Thompson, MD, MPH, completed her undergraduate studies at Harvard College, her master’s degree at the University of California, Berkeley School of Public Health, and her medical degree at Stanford. She graduated from ophthalmology residency and glaucoma fellowship at Duke University, where she was a recipient of the Heed Ophthalmic Foundation Fellowship. She is currently a clinician-scientist at the Atrium Health Wake Forest Baptist Medical Center, where she studies the development and application of deep learning algorithms for glaucoma screening and investigates age-related changes in visual function and ophthalmic structure in older adult populations.

Jullia A. Rosdahl, MD, PhD, is a board-certified and fellowship-trained glaucoma specialist at the Duke Eye Center. After completing her doctoral work on retinal ganglion cell biology during her MD-PhD at Case Western Reserve University in Cleveland, Ohio, she is working to save as many retinal ganglion cells as possible and preserve sight for her glaucoma patients as an Associate Professor of Ophthalmology at Duke University.

MS. KACI BAEZ: Hello, and welcome to today’s BrightFocus Glaucoma Chat. My name is Kaci Baez, VP of Integrated Marketing and Communications at BrightFocus Foundation, and I am so excited to be here with you today as we discuss “Exploring Artificial Intelligence and Glaucoma: Challenges, Opportunities, and Hope.” Our Glaucoma Chats are a monthly program, in partnership with the American Glaucoma Society, designed to provide people living with glaucoma and the family and friends who support them with information straight from the experts. BrightFocus is committed to investing in bold research worldwide that generates novel approaches, diagnostic tools, and life-enhancing treatments that serve all populations in the fight against age-related brain and vision diseases. Now, I would like to introduce today’s guest moderator, Dr. Jullia A. Rosdahl, who will interview our guest expert. Dr. Rosdahl is a board-certified and fellowship-trained glaucoma specialist at the Duke Eye Center. After completing her doctoral work on retinal ganglion cell biology during her MD/PhD at Case Western Reserve University in Cleveland, she is working to save as many retinal ganglion cells as possible and preserving sight for her glaucoma patients as an associate professor of ophthalmology at Duke University. Welcome, Dr. Rosdahl.

DR. JULLIA ROSDAHL: Thank you so much, Kaci. It is such a pleasure to be on the call today. I’m so excited about the conversation that I get to have with Dr. Atalie Thompson. Dr. Thompson is someone that I met during her training, and she is just fantastic, and she’s going to be talking to us about, or talking with me for all of you about the topic of artificial intelligence. So, let me introduce her. Atalie Carina Thompson, MD, MPH, completed her undergraduate studies at Harvard College; her master’s degree at the University of California, Berkeley; and her medical degree at Stanford University. And she graduated from the ophthalmology residency program and glaucoma fellowship with us at Duke, where she was a fantastic resident and fellow, and it’s a pleasure to see where she’s gone on after that. And she’s currently a clinician–scientist at the Atrium Health Wake Forest Baptist Medical Center, where she studies the development and application of deep-learning algorithms for glaucoma and other age-related eye diseases and investigates age-related changes in visual function and ophthalmic structure in the older adult population. And she’s actually joining us live—not from North Carolina, where the both of us live—but from Seattle, where she’s at one of the biggest eye science conferences in the world, ARVO, which stands for Association for Research in Vision and Ophthalmology. And so, I’m so excited to have you on the call. Welcome, Dr. Thompson.

DR. ATALIE THOMPSON: Thank you so much for having me, Jullia. I’m really excited to be on this Chat today.

DR. JULLIA ROSDAHL: All right. Well, let’s just start us off with kind of some basics. That’s where I certainly feel I am with artificial intelligence. Can you just start us off by explaining what it is? What’s AI, what’s artificial intelligence?

DR. ATALIE THOMPSON: That’s a great question, so artificial intelligence, if you break it down, the word intelligence is usually something we think about when we talk about humans, because human beings have intelligence. And so, artificial intelligence is when a computer is kind of mimicking human-level intelligence. So in AI, or artificial intelligence, computers are trained to analyze data so that they can perform complex tasks that would ordinarily require human intelligence, like learning or problem solving or language acquisition. And that’s why it’s called artificial intelligence.

DR. JULLIA ROSDAHL: Great. And can you share some examples of how we are using this in our lives right now?

DR. ATALIE THOMPSON: So, AI has kind of taken off recently, and it’s really prevalent, and you may not even realize that you’re using AI. But most people have smartphones these days, and smartphones have a lot of artificial intelligence worked into them. You may have a virtual assistant on your phone, like Siri, that you can talk to, and Siri can understand if you say, “Oh, I want to call my mom.” Siri will look at your contacts and then call your mom’s number. And Siri is just an algorithm in your iPhone that can do something for you when you talk to it. And then also, if you go online and you want to get help for some tasks, and you’re going on some company’s website, a lot of times you’re talking to an AI-assisted bot instead of actually talking to a real person first, because they’ve developed some algorithm that can recognize some keywords or common questions that many people have and provide a response before you get to that second level where you actually need to talk to a customer service representative. In people’s houses, a lot of people have different devices, like Google Home or Alexa. I know my son really likes to talk to Google and say like, “Hey, Google, make the sound of a fire truck,” or “Make a sound of a tiger,” or “Play Feliz Navidad.” And Google recognizes my son’s toddler-level speech now because Google gets smarter over time by hearing his speech pattern over and over again. And that’s one thing about these algorithms, that they can get a little bit smarter over time the more they’re exposed to new data. And now, it can actually play Feliz Navidad if my son asks for it, whereas initially, it probably didn’t understand what my son was asking for. And so, a lot of people use these types of things in their house. They might tell Alexa to turn off the lights, and the reason that Alexa can do that is because Alexa is using AI.

DR. JULLIA ROSDAHL: Well, that sounds really exciting, powerful, and, of course, a little bit scary, too. So with regards to ophthalmology and eye disease, seems like there’s a lot of potential there. So, what research is being done with AI to improve the detection of age-related eye diseases, like the one we care about a lot—glaucoma—as well as others that are really important, like macular degeneration or diabetic retinopathy?

DR. ATALIE THOMPSON: Yeah, there’s a lot of work that’s being done. So basically, the field of AI has been able to advance a lot in the last 5 to 10 years because our computers have gotten a lot more powerful. Back in the day, basically when I was a child, computers took up a lot of space. And the original computers took up entire rooms in order to really even do some very simple tasks, but because of microchips and the advances in technology, now computers can be very powerful and not take up quite as much space. And more recently, because they have become so powerful, they can analyze larger amounts of data, and that can be applied to more complex data sets. And so in ophthalmology, our data sets are really complex because we’re talking about health record data in some types of algorithms, or we’re looking at imaging, and these are kind of more complex types of data. And so, there’s been a lot of interest in developing AI algorithms that can be useful to clinicians because they could either detect disease earlier or maybe predict who’s going to progress or respond to treatment better than a person could by just looking at a scan. And so, age-related eye diseases have had a lot of advances, and they have had an FDA-approved AI device in ophthalmology, but the first one was IDx-DR. And you’ve probably heard of that, Jullia, but IDx-DR was kind of a huge advance in AI for ophthalmology, and it was developed mainly for diabetics. So, diabetics are supposed to be able to get an annual dilated eye exam every year to screen for diabetic retinopathy, but there are so many more diabetics than there are eye providers. And even though in some situations there’s access to teleretinal imaging where you can get a photo of the eye, someone still has to actually look at that photo and decide, does this person have diabetic retinopathy? Do they need to go see an eye provider? 
That’s just become not feasible with the way our population has grown in terms of the number of older adults that have more and more eye diseases or have diabetes versus the number of providers that we have. So, IDx-DR is an AI algorithm that was developed to fit this clinical need by being able to interpret a retinal image and tell the provider if this person has diabetic retinopathy. And that platform was developed on a specific device, so it’s been FDA-approved for the actual device that takes the photo and that that algorithm is used to seeing, and it could be utilized potentially in non-ophthalmic settings, so like a primary care doctor’s office, or it could be used maybe in a lower resource setting or in a community-based setting so that people who have diabetes could get their screening, but they don’t have to necessarily see an eye doctor unless that algorithm looks at the picture and determines that there’s something concerning enough, like diabetic retinopathy, that would warrant referral. So, that’s like a good example of where AI has sort of started to take off in ability to refer people for an eye disease and maybe bridge a gap in care that we might have because we have too many people that need screening and not enough providers to do that screening.

DR. JULLIA ROSDAHL: Oh, I’m just thinking that a lot of people don’t need to see an eye doctor if it’s all normal, so it’s kind of saving a lot of hassle for those patients, too.

DR. ATALIE THOMPSON: Right, because there’s a lot of diabetics that don’t have diabetic retinopathy. And so, if they had access to IDx-DR and their primary care doctor’s office could get a photo of their eye, it would tell them if they have to see an eye provider or not. And if they don’t, then that’s great. They can just get the photo the following year. And so, there’s hope that we could develop similar kinds of algorithms for other eye diseases, like macular degeneration and glaucoma, which are also very common and prevalent in older adults. And so, of course, one goal is to try to diagnose disease at an earlier stage on lower-cost imaging, like color photographs, and that’s because that kind of imaging is probably less expensive and a little more accessible, especially in non-ophthalmic settings. When you go to see the eye doctor, you’re going to get a big, full workup, including optical coherence tomography, which is a much more expensive and detailed picture of the eye on a microscopic level so we can look at those layers of the retina and the optic nerve for any evidence of damage. You might get visual field testing, where they’re checking your peripheral vision using a machine. And those are all great things to get done, but you can’t do that in a lot of settings outside of ophthalmology. So, something like an algorithm that could detect or screen for eye disease earlier might be able to be more accessible in primary care or in lower-resource regions of the world where they don’t have the money to have fancy equipment like OCT or visual field.

DR. JULLIA ROSDAHL: Yeah.

DR. ATALIE THOMPSON: And in addition to that, there’s hope that it could be applied in other kinds of diseases, too, that maybe have very high risk of blindness but aren’t as prevalent, like uveitis, or I know there’s some interesting projects being done in trachoma, which is more common in the third world, and you could take an iPhone picture of the external eye to look for trachoma, and then an algorithm that could maybe interpret that photo taken on an iPhone rather than having to have all those people get a physical exam in person with someone that can examine them for trachoma. So, that’s one way AI is, I think, taking off. And then there’s also a desire to not just be able to screen people but also in clinical practice maybe predict who’s likely to get worse, who’s likely to respond to treatment. I don’t think we’ve gotten quite as far in those types of algorithms because those are a little more complicated, but there’s been a lot of interest in doing that in a lot of different eye diseases, including the three that we just talked about.

DR. JULLIA ROSDAHL: Yeah. It’s exciting to hear what is already out there and what’s coming and what people are working on for the future. It seems like, you know, this would be a really great way to help see more patients, get more people taken care of and connected with the doctors that they need to see when they need to see them. Coming back to glaucoma, I want to hear more about the potential for AI to help us with glaucoma since we do know that many people with glaucoma don’t know they have it, and early diagnosis and treatment for glaucoma is so important for preventing blindness. Can you tell us more about how AI might be helping us there?

DR. ATALIE THOMPSON: Absolutely. Just like you said, glaucoma is kind of complicated because patients don’t realize they have glaucoma until the disease is really advanced. And then another problem that we’re faced with is a lot of patients aren’t motivated to take their medication because they can’t feel their glaucoma, they can’t tell if it’s getting worse. And that’s because glaucoma is really slowly progressive, and so people don’t feel that their glaucoma is changing. And it also makes it hard for us as physicians because it’s hard for us to predict who’s going to get worse or how quickly are people getting worse. And so, the hope here is that AI algorithms could not only help us develop some technology for detecting who has glaucoma earlier, like maybe years before they lose their vision so that they could get treatment earlier. And that’s one goal, right? So, that could be maybe applied at a telehealth setting where they get a picture remotely and then it tells them. Just like IDx-DR tells you whether or not you have diabetic retinopathy, there could be a similar algorithm that would tell you, “Here’s a color photo of your optic nerve. You look suspicious for glaucoma, and so you should see an eye provider within 3 months,” and get them hooked up for a referral. And I think that’s one big goal that a lot of people have been working on with AI and glaucoma.

On the flip side of that, once you have glaucoma and you’re starting to see your clinician in clinical practice, there’s a lot of pieces of data that go into helping to figure out how fast is somebody progressing, how likely are they to get worse? And so, AI could potentially be less subjective and help the provider have a tool to incorporate the multiple pieces of data and decide, do they think this person is at really high risk of progressing? Should they be a little more aggressive in their care, or try to get them a slightly tighter eye-pressure goal? And if these algorithms can be fast and efficient, they could maybe improve workflow in clinics, so then doctors could spend more time talking to their patients, or maybe even seeing more patients, and less time trying to sort through like five or six pieces of data to decide what’s happening with the patient in terms of their risk of progression. So just as an example, there’s a lot of interest in developing algorithms that could interpret both visual field data and optical coherence tomography data, which is that image we take of the optic nerve and macula. And if that could be done in real-world practice and give a provider some kind of progression risk, or say this person is very likely to have blindness in a certain amount of time if IOP doesn’t meet this threshold, that would be powerful. We’re not there yet, but that’s something that maybe could be done in the future. And potentially, you could incorporate other pieces of information from that clinic visit, like their eye pressure or their corneal thickness as well. And I think that information would help a patient to know why it’s important to take their eye drops if they could see this algorithm is taking this information and providing a risk score for them. 
Hopefully, that would help inform that conversation that they have with their doctor and just motivate them and to realize how important it is to continue to take their eye drops and follow up regularly as scheduled.

DR. JULLIA ROSDAHL: Yeah, I think that it helps people so much to be able to see why we are thinking what we’re thinking at those glaucoma visits. It’s sometimes hard to explain why, “Oh, this little spot on the visual field means this to me, and this is why I want to do X, Y, or Z.” And so, I think having these additional tools sound really wonderful for the physician side, as well as I think that they really would be for the patient side, too.

DR. ATALIE THOMPSON: Yeah, like if you could say, “Look, here’s your visual field today. But in 5 years or in 2 years, this is what your visual field is going to look like if we don’t slow this disease down, get you on board with the treatment plan, you know, get the treatment under control, get a good regimen.” That’d be really helpful for the patient to realize that because it’s so hard for them to see a change in their vision, but they will notice it once it gets more advanced, and we’re trying to prevent them from getting to that advanced stage.

DR. JULLIA ROSDAHL: Yeah, I know right now we kind of have that visual field progression analysis, which is just a line graph that might show that future progression line angling down, but if we had images, we could show them about what their vision might be like. I think that would be potentially really, really powerful. Well, thanks for doing this work. So, my next question is the one that I think all of my patients with glaucoma who have vision loss ask me about any new treatment or any new technology, is whether or not it can prevent blindness or reverse vision loss from glaucoma. Do you think that’s something AI would be able to do?

DR. ATALIE THOMPSON: So, I think if these algorithms are successfully developed and implemented, and if we’re able to detect glaucoma earlier in people that didn’t know they had glaucoma, years before they have vision loss—like if they were at a community screening event, and they got picked up by an algorithm—then by referring them for treatment and getting treatment sooner, that would prevent blindness, because we know we have effective treatments. Similarly, if we had good risk-prediction tools to use in clinic with our patients, and if that motivated people to understand better their eye disease potential progression risk if they’re not taking their drops and then they were more compliant, then I think that will decrease their risk of blindness. Of course, I don’t have any knowledge, unfortunately, Jullia, having been at ARVO, I haven’t seen anything about any new technology that’s going to reverse blindness that you’ve already had or reverse vision loss that’s already happened from glaucoma. And that’s because that retinal ganglion cell, which is the cell that dies in glaucoma, it’s not something that we can resurrect from the dead, and it’s connected all the way from your retina all the way through your brain. And so, one of the reasons why we can’t probably bring back vision loss from glaucoma is because that nerve that’s related to glaucoma that got damaged and that has died, it’s all the way connected through your visual system in the brain, so we’d have to be able to regenerate parts of the brain as well. And that’s just very complicated because of all the ways nerves connect to each other and connect to other parts of the brain. I have no new insights on that, and that’s why we’re so adamantly concerned about picking up glaucoma early and treating it appropriately in clinic once we have patients diagnosed with glaucoma.

DR. JULLIA ROSDAHL: Yeah, so maybe pairing AI with some other advances to have some kind of goggles that you can use with cameras and then some kinds of probes or something to your brain. I don’t know if the Star Trek goggles … we can resurrect those and pair them with AI and get anywhere with that. But back down to Earth here. So, you mentioned some of the ways that we could potentially use AI to personalize glaucoma management with managing a patient’s particular specific data and helping interpret it and prognosticate about what could happen in the future. I wonder about other ways of bringing in things that are really specific to an individual patient, like maybe genetic contributions, environmental factors, those sorts of things. Do you think AI could help us with that?

DR. ATALIE THOMPSON: Well, I think, yes. AI has huge potential just because it can be used to train algorithms that can learn from really complicated and large data. And with genomic data, the human genome has been sequenced, and there’s a lot of interesting and exciting work being done with genomic data now across a lot of different fields of medicine. So, that kind of complicated type of data, which has a lot of data points and would be kind of specific to different individuals or types of individuals, could potentially be harnessed in AI and used to help us better understand probably who’s at risk for glaucoma and whose genes are going to be more likely to be turned on than others. And as you know, environmental factors are often at play in why people’s genes get expressed and why they don’t, so most forms of glaucoma have a pretty strong genetic component, but it’s not as black and white, like if my mom has glaucoma, every person in my family gets glaucoma. We might all have that genomic risk within our genome, but whether or not it gets expressed could be related to certain environmental factors and we don’t really know a lot about what those are. So, I think for AI to be able to maybe start to look at big data sets like EHR-type data or large national survey data where people self-report various environmental risk factors in those types of data sets and connect those to genomic data, I think that could be done. It would be very powerful. I don’t think that’s been done, but it’s certainly something that’s possible. And hopefully, that could help us better understand also in the future not just who’s at risk for getting glaucoma, but maybe we could connect it to different types of eye drops and whether or not you’re going to be more likely to respond to one type of eye drop versus another, and really personalize those medical choices that we make with patients when we’re trying to pick a management plan for them.

DR. JULLIA ROSDAHL: Yeah, I think that question comes up a lot in clinic especially when the first drop hasn’t worked, and the patient is saying, “Oh gosh, do we have to keep trying them until we find one that works?” And then the answer is “Yes, that’s what we need to do.” And it would be nice if we had a faster way to pick something that would be more targeted and tolerable.

DR. ATALIE THOMPSON: Right, and I think, again, right now it’s just like Russian roulette. We’re like, “We’ll try this one, and then we’ll kind of move on to the next one,” and it’s all trial and error. But I think there is this possibility that if we better understood people’s environmental factors and their genetics, that we could better understand what kinds of patients are going to respond to which kinds of treatments better.

DR. JULLIA ROSDAHL: Yeah. So, there are clearly really amazing things happening already and potential with AI and ophthalmology and glaucoma, but what do you think some of the limitations and challenges are?

DR. ATALIE THOMPSON: So, like any new technology, there’s a lot of work to be done. And so, one challenge is that we don’t always understand or know how an AI algorithm makes its decisions or predictions, and that can lead to some difficulties when we’re trying to interpret the prediction or the output. And this is commonly called the “black box” phenomenon. So, sometimes AI algorithms use labeled data, so like a doctor might look at pictures of the optic nerve and say, “This looks like glaucoma,” so they label it as glaucoma, and “This one doesn’t look like glaucoma,” and they label it as not having glaucoma. And in those kinds of algorithms, the AI algorithm learns from data that’s been supervised by a human being, so it’s called supervised learning. But there’s also the potential for algorithms to just look at data without any labels on it at all and pick up patterns in the data that, many times, even humans have not been able to pick up and then make predictions. And that’s called unsupervised learning, and it’s working with data that’s not labeled. And so, many times, we don’t really understand or know how it’s making those predictions, and that can lead to some concern among physicians or scientists just to make sure that the predictions are being made on something that’s biologically plausible. So, I think that’s a big thing about AI that sometimes gives people pause, is like, well, this thing made a prediction, but how do we know that that prediction is true or accurate or that it’s based on something that we think makes biological sense.
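The difference between supervised and unsupervised learning described above can be sketched in a few lines of Python. This is only a toy illustration, not how any real glaucoma algorithm works: the `features` values are invented numbers standing in for a single measurement per image, and the helper names `fit_centroids`, `predict`, and `two_means` are hypothetical. The supervised half learns from labels a human supplied; the unsupervised half sees only the raw numbers and groups them on its own.

```python
# Toy illustration only: every number below is invented, not clinical data.
from statistics import mean

# Hypothetical single measurement per image (an assumption for this sketch).
features = [0.30, 0.35, 0.40, 0.70, 0.75, 0.80]

# --- Supervised learning: a human has labeled each example. ---
labels = ["no glaucoma", "no glaucoma", "no glaucoma",
          "glaucoma", "glaucoma", "glaucoma"]

def fit_centroids(xs, ys):
    """Average the measurements for each human-provided label."""
    return {label: mean(x for x, y in zip(xs, ys) if y == label)
            for label in set(ys)}

def predict(centroids, x):
    """Assign the label whose average measurement is closest to x."""
    return min(centroids, key=lambda label: abs(centroids[label] - x))

# --- Unsupervised learning: no labels, only the raw measurements. ---
def two_means(xs, iterations=10):
    """Split the values into two groups with a 1-D k-means (k = 2)."""
    low, high = min(xs), max(xs)  # start the two group centers at the extremes
    for _ in range(iterations):
        group_low = [x for x in xs if abs(x - low) <= abs(x - high)]
        group_high = [x for x in xs if abs(x - low) > abs(x - high)]
        low, high = mean(group_low), mean(group_high)
    return group_low, group_high

centroids = fit_centroids(features, labels)
print(predict(centroids, 0.72))   # label learned from the human-labeled examples
print(two_means(features))        # two groups found without any labels
```

Note that the unsupervised half returns two unnamed groups. Nothing in the data says which group, if either, corresponds to disease, which is one small view of why predictions from unlabeled data can be harder to interpret.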

And also, I think, like you had mentioned earlier, a lot of people can get concerned when they hear about how AI seems like it’s everywhere, and they start to worry that maybe AI is going to take over people’s jobs or start to replace humans or replace doctors from the workforce. I think that’s pretty unlikely to occur in ophthalmology or in medicine, in general. And in these cases, it’s really just the AI algorithms are being developed to help as a tool to assist doctors, hopefully improve their efficiency (like when reviewing images in clinics, for example), or maybe increase access to care in places where people wouldn’t otherwise be able to get that care, or also, like I said, meet those gaps where we don’t have enough providers to see all the people in the world to screen them. And the algorithm could be a first step, and then people would still go on to see a provider if they need to see one. And already, AI is being used in radiology. Breast imagers use AI to help pick up suspicious breast lesions that the radiologist may have missed, because we’re human, so we’re not perfect. And of course, AI algorithms aren’t perfect either, but if you have a human do a pass and an AI algorithm do a pass, and there’s something that maybe the radiologist missed, that algorithm can flag it, and then they can take a closer look and see if they think that is a suspicious area for breast cancer. And that’s a good example where it’s already being done and it’s pretty well integrated into the clinical practice. So, I think in ophthalmology we could have similar kinds of tools that assist doctors.

There’s other issues, though, because in radiology, unlike in ophthalmology, all of the imaging has been standardized, so what that means is that everybody is getting the same kind of image, and it’s being output and stored in the same kind of way. And so, there’s not all these different companies with very different devices that get similar kinds of images but have their own proprietary software. In ophthalmology, we have different companies making different OCT machines, different color fundus machines. And the way those pictures get taken and processed is actually subtly different enough that an AI algorithm that’s trained on one machine and is used to seeing the types of images from one machine may not perform well when it’s used in a different setting on similar types of images but from a different machine. So, you can get an OCT on a Heidelberg or an OCT on a Zeiss or an OCT on a Topcon, and those images are similar, but they’re different enough that the algorithm can get a little bit tripped up, and that’s just because these algorithms are only as smart as the data that they’ve seen. So, in real-world clinical practice, as you know, even within one hospital, they have one type of device, or different clinics might have different devices, and so your algorithm might not be able to be used in all the different clinics unless it’s seen data from all those different types of devices, the real-world data from different devices. It’s hard to get data sets that have enough imaging on all the different kinds of devices to really make sure it’s generalizable. Of course, earlier algorithms that were trained were trained on data that was from clinical trials. 
Well, in a clinical trial, first of all, the imaging tends to all be from one type of device, so they perform well in those clinical trials, and also those patients are seen at regular intervals, like they’re seen exactly 6 months apart and they get the same type of quality of image done every time, and it’s always being done by the same research imager, so there’s just these little things like that that affect the quality of the imaging that makes the image quality really high in the clinical trial. In real-world practice, a different person might take a picture of your eye each time you go to the doctor. That interval between visits for doctors could be variable because it’s based on what your eye disease is doing, right? So, you might tell them, “I’m going to probably come back at 3 months,” and then next time you might say, “6 months,” and the next you’re going to be like, “Oh, I’m going to do 9 months.” So, it could be variable, and so there’s not this consistency in terms of the imaging in terms of how often it’s being done. And also, the quality might be not quite as high as a clinical trial. So that means that we really need to be training algorithms on real-world data. And there was kind of a big session on AI on Saturday here at ARVO, and that was a big theme as well. It’s like, you know, the real-world data and real-world translation for AI is still not there. And that’s because we need to be able to have access to clinical imaging data sets that have all these factors at play—image quality being more variable, the timing of visits being more variable, and also people getting lost to follow up for periods of time and then coming back. And building such data sets is a little bit expensive, pretty time consuming, and complicated. And then sharing data across institutions has become complicated as well because of HIPAA and data privacy rules. 
So, those are some bigger issues in AI, in general, that make it more challenging when it comes time to getting those into clinical practice.

And in glaucoma, in particular, we have some more challenges, and I know that this is the Glaucoma Chat, so, you know, in retina practice, if you have macular degeneration or diabetic retinopathy, you can basically tell based on a photo, whether it’s a color photo or an OCT of the retina. So, it’s a structural diagnosis. It’s just based on structure, but glaucoma is not a purely structural diagnosis. It requires interpretation of a lot of variables. We have imaging of the nerve, but we also have visual field data, we have eye pressure, corneal thickness, and so we have more variables at play when we try to make judgments about whether or not someone has glaucoma and whether or not their glaucoma is getting worse. And then, of course, lots of other optic nerve diseases can mimic glaucoma. So sometimes, we also are looking at neuroimaging of the brain and getting labs to make sure that they don’t have some other diagnosis. And so, glaucoma is a little bit tricky to kind of come up with a one-stop shop picture that can predict glaucoma or predict glaucoma progression because of these other variables we’re looking at, and glaucoma is a more complex disease to diagnose. And that’s why, Jullia, we went into glaucoma, because we like a good challenge. And then, some people … there’s a lot of racial variation in the optic nerve, and I think there’s been a growing appreciation that a lot of the data sets that people have created aren’t very racially or ethnically diverse. And so, some people have very tilted optic nerves, like if somebody has myopia, and that’s much more common in Asia, they can have a very tilted nerve, and that looks different. It could be hard to pick up glaucoma in those kinds of nerves. 
If you’re Black or African American, it’s much more common to have a larger optic nerve, and you can have an appearance of a large cup-to-disc ratio, which is one of the things we look at in glaucoma diagnosis, but you may not have any damage, and it might be that the tissue is being spread out over a larger surface area. And so, these nerves can look glaucomatous, but it turns out if you look at the optical coherence tomography and you look at the retinal nerve fiber layer, they actually don’t have glaucoma; they just have a physiologically large optic disc cup. And then, other people have really small nerves, so they have the opposite problem. They don’t have a big cup-to-disc ratio, and their nerves are really small, but they actually do have damage, and it can be hard to pick that up because of the small nerve size. And a lot of those variations are differentially distributed across different racial and ethnic groups. And there’s really been more of an interest and push to try and develop data sets and collaborate to get data sets that have more racial and ethnic diversity in them to approach these problems because you don’t want to develop a data set in Caucasians and then try to apply it in Asians, because you’re just going to get different performance than what you should be getting, since the Asian optic nerve, in general, looks very different from maybe a Caucasian optic nerve. And I think there’s more appreciation that these data sets … we don’t want them to be biased; we want them to be something that we can apply in populations of color and also in different racial and ethnic groups because they have different ways that their eye appears at baseline. This is complicated, you know? It’s complicated to build those data sets. It takes a lot of collaboration and time.

DR. JULLIA ROSDAHL: Yeah, I’m really glad that you brought up the racial and ethnic variations. I was just thinking about some of the AI controversies with imaging modalities for facial recognition, where there were real difficulties with different skin pigmentation leading to different faces being picked up differentially, so I appreciate you bringing that up. I think, certainly, it just really solidifies the need for lots of participation in clinical trials and in research and in building these databases and including all the people that are potentially affected. So right now, you’re at ARVO out there in Seattle. You mentioned one of the big AI symposia that you had on Saturday, and so I wonder if there’s anything else that you might have gleaned from this meeting. I don’t know how many thousands of eye scientists are there and talking about things, but I know ARVO is just a fantastic gathering, so many posters, so many talks, so much collaboration and sharing of knowledge there. So, what have you seen? What are you excited about?

DR. ATALIE THOMPSON: Yeah. So, I think that particular session had a lot of different topics that were covered. There was kind of a big push to discuss: What are we going to do when it comes time to try and implement these in clinical practice? Because in reality, even though we have some FDA-approved devices, IDx-DR being the first one, they’re not really being adopted widely. And so, we want to develop technology that’s user-friendly and that has a market so that it makes it into actual clinical practice. And in order to do that, we have to think about, is it cost effective, can the infrastructure that already exists be utilized, or do we have to bring in all new infrastructure? Because that’s a much bigger ask for a health care system, right? If the health care system’s invested in these three different OCT machines or this color photo machine, they don’t want to be told, “Well, you’re going to have to buy a whole new machine that just works with my special algorithm.” And that gets back to trying to develop things that are also generalizable, that could be applied maybe in different kinds of settings, or would work with multiple different kinds of devices. So, I think that’s really something that’s going to be a challenge, but it’s good that people are having those discussions. I did see an interesting poster. I think it was about the use of electronic health record data. I think there’s been a lot more interest in trying to use electronic health record data because there’s a lot of it. Now, it’s limited because it’s not really been designed for research purposes (it’s designed for billing purposes), but there’s a lot of things we could learn from it. And one of the studies I saw was about using this national registry of electronic health record data called the All of Us Research Program, where data was contributed by a bunch of different sites across the country. 
And what they tried to do is see if there were other things, like other diagnosis codes or labs or biometric data such as heart rate, weight, and height, that kind of stuff. Does any of that predict who’s more likely to get glaucoma? Could a tool be used within the EHR system to develop an algorithm that says, “Hey, this person probably needs to be screened for glaucoma”? And I think that’s a great question to ask, because that data exists, and maybe that could be easily implemented into an existing electronic health record data system without costing a lot of money. And what they found, which was kind of interesting, was that things like your body mass index (your BMI, which is related to your height and weight), your heart rate, and your hemoglobin A1C were actually predictive of whether or not you were likely to get a glaucoma diagnosis. And so, I thought that was really interesting. I don’t often think about A1C. I do sometimes think about BMI, but I never thought about heart rate when thinking about glaucoma. And I don’t think we understand necessarily why those might be related, but this is a preliminary type of look, and I think it also opens up many questions about what kinds of things might contribute to whether or not people get glaucoma. But developing a tool that could be used in the EHR, I think, is a really great idea. So, I think that was kind of interesting and exciting, and I think the use of EHR data is kind of a newer idea. I’ve been working, too, with a group of several institutions to do some work to get around some privacy issues and data sharing issues with AI using federated learning. And the idea there is that the data could stay at the local institution, but the algorithm could access the data from each institution where it’s at, train at each institution, and then cross-train without having to move thousands of pictures or thousands of data points. And I think that’s great. 
If that ends up working out and we’re able to kind of implement federated learning, that will be a way of making more powerful, larger data sets that involve data from many, many institutions without having to have those institutions move their data, which would be great.
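
Editor's illustration: the federated learning idea described above can be sketched in a few lines. This is a minimal toy version of federated averaging, not the method used by Dr. Thompson's group. The site names, the synthetic data, the tiny linear model, and every function name here are hypothetical and chosen only for illustration. Each "institution" trains on its own data, and only the model weights, never the records, are sent back to be averaged.

```python
# Toy federated averaging sketch. All names and data are hypothetical.
from typing import List, Tuple

Dataset = List[Tuple[float, float]]  # (feature, label) pairs that stay at one site
Weights = Tuple[float, float]        # (slope, intercept) of a tiny linear model


def local_update(weights: Weights, data: Dataset,
                 lr: float = 0.05, epochs: int = 20) -> Weights:
    """Train y = w*x + b on one site's own data with plain gradient descent."""
    w, b = weights
    n = len(data)
    for _ in range(epochs):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


def federated_average(updates: List[Weights], sizes: List[int]) -> Weights:
    """Combine site models, weighting each by how many records it trained on."""
    total = sum(sizes)
    w = sum(u[0] * s for u, s in zip(updates, sizes)) / total
    b = sum(u[1] * s for u, s in zip(updates, sizes)) / total
    return w, b


def train_federated(sites: List[Dataset], rounds: int = 100) -> Weights:
    """Each round: send the global model out, train locally, average the results."""
    global_weights: Weights = (0.0, 0.0)
    for _ in range(rounds):
        # Only weights leave each site; the raw data never moves.
        updates = [local_update(global_weights, data) for data in sites]
        global_weights = federated_average(updates, [len(d) for d in sites])
    return global_weights


# Synthetic data: every site follows y = 2x + 1 but covers a different range of x,
# loosely mimicking institutions that see different slices of the population.
site_a = [(x / 10, 2 * (x / 10) + 1) for x in range(0, 10)]
site_b = [(x / 10, 2 * (x / 10) + 1) for x in range(10, 20)]
site_c = [(x / 10, 2 * (x / 10) + 1) for x in range(20, 25)]

w, b = train_federated([site_a, site_b, site_c])
print(f"slope={w:.3f}, intercept={b:.3f}")
```

In this toy setup the averaged model should approach a slope of 2 and an intercept of 1, even though no single site holds the full range of data. Real systems add much more, such as secure aggregation and handling for sites whose data differ systematically, which this sketch leaves out.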

DR. JULLIA ROSDAHL: Yeah, all those things sound so, so fantastic. The idea of really bringing AI into clinical practice in a really practical way to implement it for us. I loved, too, that poster you talked about with using the EHR to help us in different ways. That’s really interesting to think about those more systemic metrics and what they tell us about glaucoma. Yeah, and like research is prone to do, you ask one question, you get an answer, but also it opens the door to even more questions that you get curious about. This is just so fun to talk to you and to think about these things. I think that we just have time for maybe one question, so I’d love you to put on your dreamer goggles for a moment and just tell us: What do you think the future of glaucoma would look like if advances in AI really became incorporated into our everyday practice even more?

DR. ATALIE THOMPSON: So, I mean, I think AI isn’t going to replace doctors, like I said, but I definitely think if we can get tools that are feasible, that can be implemented and used, and that are quick and easy, this could help support us in caring for patients—either by making our care more efficient or by expanding access to care, especially in parts of the world where there’s not going to be an ophthalmologist there to do every single eye exam. And parts of the world that are like that are also our own United States. Lots of people live in areas where they just don’t have an ophthalmologist for, like, 100 miles. So, I think I would really, you know, I’m a glaucoma doctor, so I would really love to see glaucoma screening in non-ophthalmic settings become a reality. And I think AI could get us there, because I think it could make it feasible to screen and automatically refer people using telehealth platforms that take a photo remotely, tell us that somebody’s at risk for glaucoma, and provide that referral so that doctors don’t have to grade all those images. The algorithm could just provide the referral, and maybe we could get it to a point where it connects them to a specific provider. So, I would love for that to happen. And I think it would also be great if we had some more decision-support tools in glaucoma care. There’s more glaucoma patients than there are glaucoma doctors. The waitlist to see glaucoma doctors can be very long, and time is of the essence with glaucoma. We want to get people treated earlier. So, I think if we could have ways to see patients and increase our efficiency in clinics and maybe incorporate multiple data points that we’re collecting to give us better risk stratification tools, help us to inform patients about their risk for glaucoma progression, that would be really powerful. So, I think that would help guide our discussions with patients and help us provide better care to patients. So, that would be awesome. 
If we could do those two things, I’d be happy.

DR. JULLIA ROSDAHL: Yeah, I agree with you. Those two things sound really great: getting people diagnosed and into our care and then taking the best care of them that we can. Thank you so much. I’ve had such a nice time talking with you and getting a little bird’s-eye view from ARVO and from your experience. I’m going to turn the conversation back over to Kaci from BrightFocus to close this out.

DR. ATALIE THOMPSON: Thank you. Thank you for having me.

MS. KACI BAEZ: Thank you so much, Dr. Thompson and Dr. Rosdahl, for joining us today, such an insightful discussion. And at BrightFocus, we’re also funding AI in glaucoma research, as well. And there are new developments that just seem to unfold every day, and it is very exciting to just hear more about it, so thank you, again. And to our listeners, thank you so much for joining our Glaucoma Chat. We hope that you found it helpful. And thank you so much for joining us, and I hope everyone also is having a great time at the ARVO conference. We have a number of folks from BrightFocus there, as well. So, such a great discussion today. There is a wealth of information on our website at www.BrightFocus.org/glaucoma, as well as free bilingual resources. Our next Glaucoma Chat will be on Childhood Glaucoma and will take place on Wednesday, June 12, and we hope you can join us then. And thank you so much for joining us today, and this concludes our BrightFocus Glaucoma Chat.

BrightFocus Foundation is a premier global nonprofit funder of research to defeat Alzheimer’s, macular degeneration, and glaucoma. Since its inception more than 50 years ago, BrightFocus and its flagship research programs (Alzheimer’s Disease Research, Macular Degeneration Research, and National Glaucoma Research) have awarded more than $300 million in research grants to scientists around the world, catalyzing thousands of scientific breakthroughs, life-enhancing treatments, and diagnostic tools. We also share the latest research findings, expert information, and resources to empower the millions impacted by these devastating diseases. Learn more at brightfocus.org.

Disclaimer: The information provided here is a public service of BrightFocus Foundation and is not intended to constitute medical advice. Please consult your physician for personalized medical, dietary, and/or exercise advice. Any medications or supplements should only be taken under medical supervision. BrightFocus Foundation does not endorse any medical products or therapies.
